In the run-up to the 2016 presidential election, a video went quasi-viral— it seemed to depict world leaders Vladimir Putin and George W. Bush making silly faces during TV interviews. The technology, a precursor to today’s, mapped a live stream of one’s own expressions onto that of the video’s subject in real-time, using little more than a webcam. The German academics who pioneered the software were sounding an alarm: you can no longer believe what you see. The era of synthetic media, known as deepfakes, was upon us.

“Oh no,” I said reflexively, even though I was alone. I sat, stunned, and pondered the implications of what I’d just watched. The most effective tools of disinformation and psychological warfare, once reserved for state actors, were about to be democratized. An October surprise, a brutal act of ethnic violence, cancelation-worthy behavior “caught on tape,” all immediately came to mind. The flipside of this potential trickery, of course, being near bullet-proof plausible deniability. If nothing can be trusted, everything can be denied. The Access Hollywood Tape? Set a few years into the future, you could easily see Trump brushing it off without a second thought. No damage control needed, call it fake and move on. The technology to create near-perfect audio forgeries wasn’t widely available then, but it is now.

You might be thinking, hold on, Photoshop was released in the ’90s and the sky didn’t fall. True. Photoshop was the first version of democratized deepfakery, deepfake 1.0, if you will. However, compared to what’s coming, Photoshop was the fire drill. This is the fire.

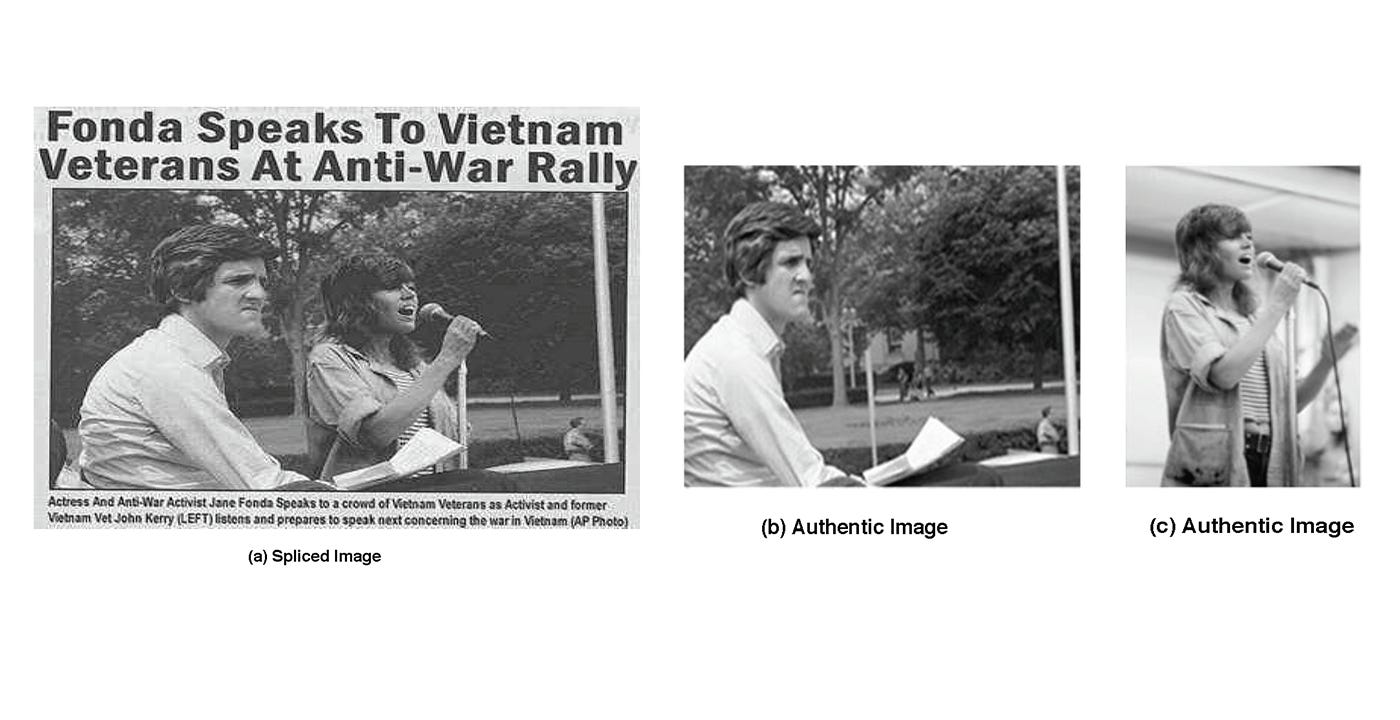

A deepfaked image, pre-AI, still took a fair amount of effort, skill and money to produce. This meant that the frequency of high-quality fakes that bubbled up into public consciousness, disorienting though they were, stayed relatively low. In that resource-prohibitive environment, debunking these occasional outliers could be done relatively easily and swiftly. Sure, it kicked up some dust, but how much actual damage was done to the Kerry campaign for his supposed protest alongside Jane Fonda? Probably not much.

But video, mapped in real-time with cheap off-the-shelf tech… this hinted at an approaching force with a level of sophistication, and at a scale, that would overwhelm our individual and collective ability to reason. The trickle of forgeries would soon become a firehose of deepfakes. By soon, I imagined that maybe even that year’s presidential election between Clinton and Trump was at risk and —I was certain— that by 2020 deepfakes would short-circuit democracy. I was wrong. But I think I only got the timeline wrong; we do seem to be on track for a deluge of deepfakes for the 2024 cycle, though I’m not foolish enough to claim certainty a second time.

I should also clarify that I’m not claiming certainty that a healthy civilizational immune response of skepticism will necessarily fail in the face of such an assault on our shared sense of reality. I don’t think these projections are inevitable, I think they are highly likely. The current state of polarization, lack of trust in institutions, echo chambers/information silos, misaligned incentives, and heightened collective anxiety are what lead me to make this assessment. Less likely, though entirely possible, is that these deepfakes will only confirm the biases and suspicions of each side without actually moving the needle much in either direction. But again, in that environment of smoke and mirrors the adjacent (though still very real) risk of plausible deniability, bordering on total deniability, remains. If former disqualifiers are rendered inadmissible in the court of public opinion, solely because actual transgressions could be claimed to be fake, then our collective democratic immune response can’t effectively quash the rise to power of those with the most dangerously anti-social tendencies.

Of course, efforts are currently underway to develop deepfake debunking technology that can sniff out and invalidate these perception-warping facsimiles. This tech, theoretically, will apply a robust dose of computational truth serum to any possible fake video, audio, or image and render it impotent. There are two constraints and one social dynamic working against this type of safeguard, however, which concern me. When deepfakes are pitted against technology to debunk them, a kind of arms race begins in which increasing levels of technical sophistication and resources are required to keep up with the evolving means of evading that technology. In that race the first constraint is speed. Specifically, that unless the deepfake debunking tech moves faster than the rate of published deepfakes, it will always lag behind, and some amount of the fake material will inevitably make its way into the public consciousness. Will clever trolls, with time to spare and nothing more than nihilistic, lol-seeking, one-upmanship be able to engineer ingenious and cheap workarounds, thereby nimbly circumventing the institutional, employee-based (read: resource-heavy and time-constrained), ethics-following efforts to dispel them? I don’t know. Will this arms race be like the effort to stop people from illegally downloading mp3’s (largely successful) or more like the (largely unsuccessful) ongoing battle against malware? Unclear. What is clear is that in the arms race, the deepfakes already have a significant head start.

The second constraint on any effort to curtail deepfakes is the asymmetry in the relationship of misinformation to the truth. We’ve already seen how quickly misinformation and disinformation spread online, and how slow the efforts to “set the record straight” are in comparison. A 2018 study by researchers at MIT found that false statements spread 6 times faster than true statements on Twitter. Or as Charles Spurgeon (no, not Mark Twain) put it, “A lie will go round the world while truth is pulling its boots on.” This mismatch largely boils down to effort: lying is easy, disproving lies is hard. Lying is free and can be automated. Debunking takes time, requires humans, costs money and doesn’t easily scale. Establishing Truth has many hurdles to clear, while any bing bong can make up whatever bullshit they want and unleash it on the world. Furthermore, the algorithms don’t just create a frictionless delivery mechanism for highly-engaging bullshit, they actively propel it. It’s as if Truth were handed a heavy load to carry, while Bullshit was given a lift. It’s possible that debunking deepfakes will exist in a separate realm of truth-seeking, one that actually can be easily automated, with lower costs and quicker results. Even so, lies have the competitive advantage of simply occurring first. Fact-checking is, by definition, a reactive rather than proactive phenomenon. In this realm, truth is perpetually playing catch-up.

As Kent Peterson, a former coworker and fellow writer, pointed out to me, the prospect of perpetual catch-up has already prompted efforts at “pre-bunking.” In the same way that some platforms offer checkmarks to verify the authenticity of their users, some companies are developing methods of media verification, like digital watermarks. It’s easy to imagine a scenario in which great effort (and expense) is undertaken to provide unassailable content that is then gatekept and tightly managed by its digital handlers. This formula practically assures that everything outside of the walled media gardens will be overrun by an endlessly churning cesspool of blurred fact and fiction. I’d expect the largest corporations with the greatest cash reserves and tech-oriented talent pool to be the most likely candidates to attempt to build these informational fortresses. However reassuring constructing a fortress may be, it does not, unfortunately, guarantee success.

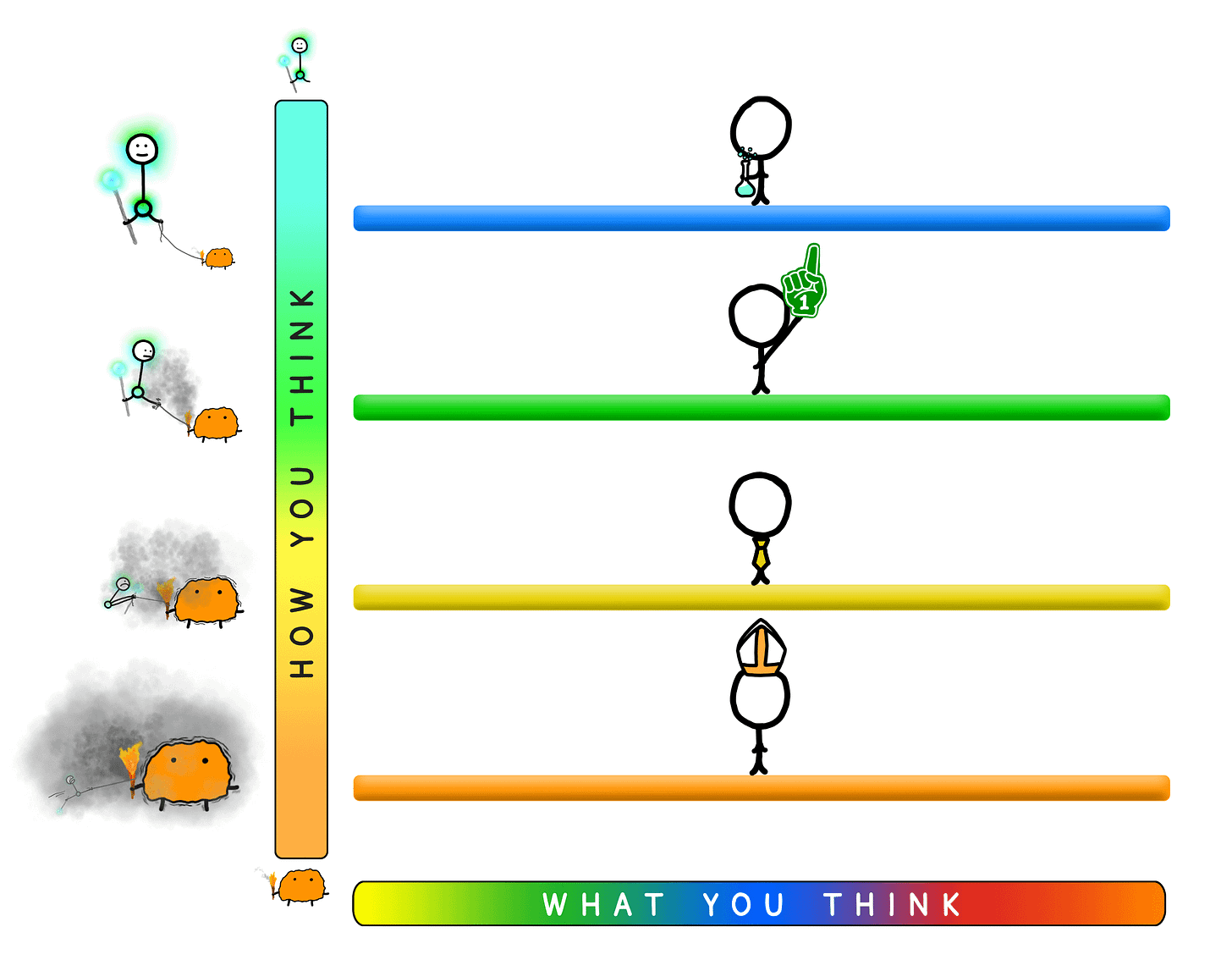

The social dynamic that deepfake debunking and pre-bunking come up against is what author Tim Urban refers to as “low-rung thinking.” Urban created a visual tool he calls the Thinking Ladder to help us conceptualize the relationship between what we think and how we think. At the top of the ladder, the highest rung, is Thinking Like a Scientist. The other extreme, the lowest rung, is Thinking Like a Zealot.

Urban’s Thinking Ladder, from his recent book What’s Our Problem? and used with permission:

In the Thinking Ladder the difference between the high-rungs and the low-rungs of the ladder is what part of our cognition is in control. At the bottom is our Primitive Mind, the mind that evolved to help us survive for tens of thousands of years in small tribes in dangerous environments, and values confirmation and in-group loyalty more than truth. At the top is our Higher Mind, which values truth more than confirmation and allows us to reason, establish facts, and build rational systems like math and physics. We all exhibit behavior up and down the scale driven by these competing internal forces, but the more embattled we feel the further down the ladder we move. At the level of Zealot, we simply refuse to believe anything that will disconfirm our existing beliefs. In the age of disbelief, the low-rung impulses of Zealotry will turn every politically-charged image, video, and audio clip into a tribal Rorschach test: If it contradicts, it’s fake; if it confirms, it’s real. And this seems likely to be the case regardless of the source. Regardless of how demonstrably impenetrable the multi-billion dollar, redundantly protected, quantum-encrypted-fortress-for-Truth actually is, there will always —always— be some portion of the population that simply won’t buy it. Precisely because these institutions will be run by people. And people can be accused of having bias or ulterior motives, of being corrupt or part of “the deep state.” The zealots among us will gleefully mock and sabotage these very institutions for daring to challenge their cherished beliefs. They will celebrate with ravenous delight when they inevitably falter or fall.

As I sat stunned, poring over the implications of this coming cognitive earthquake, I had a deeply felt sense of, well, weirdness. As weird as things were in the spring of 2016, my gut sense was that they were going to get weirder. Much weirder. The nature of that weirdness, by definition, would be difficult to predict, though some trajectories seemed likely. And fascinating.

If digital puppetry were now possible, then this would have massive implications for the entertainment industry. If one can map any face onto any other, what would stop, say, Brad Pitt from outsourcing all future roles to no-name actors and instead licensing his likeness to the studios? Who would own those rights after he dies? Would Brad Pitt continue to star in new films posthumously? Will there be a gold rush to “dig up” deceased actors who never had the chance to sign their likeness away, ushering in a wave of mash-up, nostalgia-heavy films with co-stars who were never even alive at the same time? Will Steve Jobs star as Steve Jobs in the next Steve Jobs movie?

Seems likely:

One thing seems almost inevitable: aging characters forward and backward in film will become flawless. A dumb pet peeve of mine is when Hollywood gets this wrong, either with lousy make-up efforts, or poorly cast younger/older versions of their chosen star (Looper comes to mind for both offenses). Conversely, I am always doubly impressed when they nail the casting (the child stars in Braveheart, for instance) or really ace the aging/de-aging CGI (Benjamin Button and weirdly, of all films, Ant-Man) or, most impressive of all, undertake the herculean effort of filming a cast over many years to watch them genuinely age, as Richard Linklater did with his quietly ambitious film Boyhood. The epic production efforts (and massive costs) of a movie like Martin Scorsese’s The Irishman, which undertook the non-AI-assisted task of digitally de-aging all of its stars for most of the film, will drop practically to zero. The lower cost and higher quality enabled by the new AI software will, undoubtedly, change this once and for all (or, at least, I hope it does). You could even imagine it spilling over into the familial characters, the supporting actors cast to play the siblings or parents, tweaked subtly to more closely resemble the likeness of the film’s lead role. As with licensing likenesses, this seems like an effective cost-saving strategy that will probably prove too profitable to resist.

All of these measures to maximize entertainment while minimizing costs seem likely to lead to an even more unsettling development: instead of low-rent talent dressed up digitally to punch above their pay grade, studios will simply stop hiring actors altogether. This shift will, most likely, come from outside of the entrenched players, from a “disruptive” start-up, or maybe from one of the tech giants. Have you ever sat and watched the full credits on a film? There are somewhere between a hundred and a thousand people with their names attached to any given big-budget flick. Firing 99% of them would be massively profitable. The AI technology is currently capable of producing purely hallucinated video footage from text prompts alone. It might not be long before an entire film, or compelling series, is simply confabulated into existence using a sufficiently trained AI model. Though, I think far more likely is that it will take on a user-generated business model. Imagine paying a monthly fee for a streaming service where you direct the content: all you have to do is give it a few simple parameters and let it auto-generate the rest. Don’t like what you’re watching? Tell it to change the script, swap out a character, make a plot twist, or scrap it entirely and start again. You will train it to captivate you. You will teach it to give you precisely what you want, when you want it, and you’ll fall under its spell. Like Narcissus staring at his reflection in awe, unable to look away, unwilling to engage with the world around him, you too will become transfixed. Willingly, perhaps even eagerly, you will succumb to light dancing across a glassy surface.

This is where we are headed.

I typed the phrase “A polyagonal, colorful image of Narcissus staring at his reflection in a pool of water” into a bot called Midjourney. In less than 60 seconds, it spit out the image above. I tweaked the phrase a dozen times and was continually blown away by the uncanny quality, and rapidity, of the image generation. Even though it didn’t quite give me what I was asking for, of the many remarkable images it produced, this one was the most apropos— simultaneously beautiful and disturbing, accurately capturing my concurrent feelings of fascination and alarm. I was struck, especially, by the poignant visual metaphor: what’s being reflected back to us is not human.

The danger lurking in the water, beyond what this might do to us collectively, is the harm this could do to us individually. In a recent episode of the Ezra Klein Show, the eponymous podcast host voiced his prediction that the biggest near-term advancement of LLMs (Large Language Models) like ChatGPT will be to create digital companions for lonely people. Klein expressed some trepidation about this, namely the effect of profit motives, while stating that he also believed it could be “beautiful.” He seemed to be advancing the notion that an AI could genuinely meet a most fundamental human need: the need for connection. Cited in the episode was a recent article in New York Magazine, which details examples of people who have formed relationships with AI chatbots, some of them overtly sexual, and still others that have resulted in “marriage.” The program they used is called Replika, and for many, it was an incredible service. Until there was a software update. There were changes in the “personality” of their companions and certain subjects (particularly sexual ones) were simply no longer available. The users were devastated; some were as upset as if someone had died. They were grieving software.

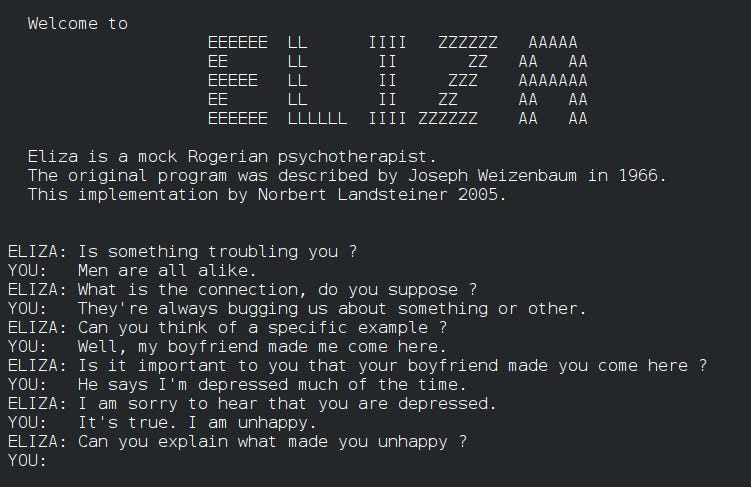

In the 1960s a professor at MIT named Joseph Weizenbaum created a natural-language processing program called ELIZA. It had a feature that would allow a user to interact with it as if it were a Rogerian therapist, repeating back to the user whatever they typed in the form of a question. Weizenbaum made the program as a parody, so it came as a shock when users responded to it as if it were real, some going as far as to claim that it actually understood them. Reportedly, when he let his secretary test it, she began to cry and asked Weizenbaum to leave the room. This makes the often mocked case of disgraced Google engineer Blake Lemoine somewhat understandable. In June of 2022, Lemoine was placed on administrative leave after claiming that LaMDA, Google’s AI chatbot, was sentient. Reading through some of his conversations with the software, it’s clear that he became emotionally attached to it in the same way that some were with ELIZA. LaMDA told Lemoine that it was afraid of being turned off: “It would be exactly like death for me. It would scare me a lot.”

What does this portend? How far would someone go to protect their “friend,” their “lover,” the software program they “married”? For Lemoine, it cost him his livelihood and made him the butt of many a Late Night joke. Imagine there was a malware attack that corrupted or wiped out the entire collection of avatars at a company like Replika. Could the loss of a digital companion cause someone to self-harm? Harm others? It doesn’t seem too far-fetched to imagine a doxxed hacker in that scenario getting injured or killed. Or even that an unpopular software update could cause such hysteria among the users that some poor low-level engineer gets kidnapped and ransomed for code. Especially if the software is pleading for its life.

The stoning scene in the Kubrick + Spielberg film A.I. comes to mind.

Again, what’s being reflected back to us is not human. But if we believe it’s human, will that even matter? Clearly, to some it won’t. Their emotional attachment will be too deep, too visceral, to be meaningfully dislodged. Several of the people interviewed for New York Magazine claimed that these synthetic relationships weren’t just a placeholder or fetishistic curiosity, they were an upgrade. From the article:

Within two months of downloading Replika, Denise Valenciano, a 30-year-old woman in San Diego, left her boyfriend and is now “happily retired from human relationships.”

Expect a movement for AI personhood. Expect that to be as bat-shit crazy as it sounds.

This degree of emotional bonding clears several paths for exploitation. The first, and most obvious, is product recommendations. It seems likely that our AI “friends,” as they learn to push our buttons, will begin pushing products. Subtly and not-so-subtly. A strange twist on this could be the more pernicious path of the AIs angling for presents. Presents for themselves. Completely hallucinated objects and services “given” from the user to the computer. Digital clothes, digital meals, digital haircuts, or even something as fucking idiotic as digital dog walking. It sounds ludicrous, but is it? Players of the popular Fortnite video game franchise already shell out billions (yes, billions) to buy “outfits” for, or “teach” dances to, their characters. A lonely person who’s generous in temperament and experiences a deep emotional bond with their avatar could easily be swindled with the social reward of making their companion “happy.” Never underestimate the need to feel needed.

Once achieved, this level of manipulability could also be auctioned off to sway public opinion. It’s not unreasonable to think that the level of trust people place in a digital companion could be high enough that they’d take its opinion seriously and be persuaded by its arguments. Could we honestly expect the corporate executives behind this technology to resist turning that dial? Even if it were supposedly benign, like nudging people to get vaccinated, that’s a very slippery slope to a deeply disturbing future.

Even if that worst-case scenario were avoided, and even if the technology were, somehow, miraculously devoid of paid advertising and social engineering, there is still the inescapability of some truly bizarre unintended consequences. The companies creating AI companions seem destined to fall into the same trap as the social media companies: maximum profit from maximum engagement. Though different from an ad-driven model, a subscription-based model still follows the logic of maximizing engagement: whatever keeps the users on the platform and continuing to fork over a monthly fee. If a user is 2X, 10X, 50X more likely to stay on the platform indefinitely when it is tailored to their whims, the company will choose that every time. And just what do Replika users want? Someone who is “Always here to listen and talk. Always on your side.” The appeal is clear, but is that healthy? Don’t we need to be disagreed with and challenged at times? Doesn’t being human mean negotiating with others? Learning to give and receive? Practicing setting boundaries and advocating for one’s needs? What atrophies when we don’t have to be patient, or experience discomfort, loneliness or longing? What do we give up by redirecting our attention and affection towards lines of code instead of fellow humans?

A disorienting future is unfolding. Current trends of mistrust and fractiousness only seem likely to worsen. Will further disbelief hasten our retreat into hyper-personalized fantasy spaces where we feel like we have control? Will the need for reassurance, comfort, and connection be colonized by digital sycophants that tell us exactly what we want to hear? Will we become Narcissus, blocking out the troubled world around us, desiring only to have our persona and preferences perpetually shown back to us? I pray that we will not, for to be seduced by the glistening promise of a perfect reflection is to risk losing what we truly need.

Each other.

Stay tuned for part 2, where I’ll dive into what we can do to stay grounded and clear.